🔍 AI coding requires better inputs and robust verification

Some great feedback on the previous post (Impressive is not always effective):

"I agree. It would be interesting, however, to compare the progression of AI code generation with that of compilers - were there star assembly-code engineers saying that compilers were just a way to brute force the generation of assembly code?"

"I feel like there is a fundamental difference — perhaps that AI always lacks the determinism of a good compiler. And it can completely ignore the best of documentation without throwing an error code."

Having lived through several of these transitions (Assembly -> C -> C++ -> Python -> Go -> Rust -> Zig -> AI ...), I have seen that there are always those who prefer to stick with the current technology, thinking the new one adds no value or is even detrimental.

AI was more of an example in the original article, since it can make an impressive mess much quicker than any human. Humans can make messes as well (I have made plenty myself). So the point is not so much that AI is bad (or good), but rather the question: "Are values in place?" And is there a process, system, or platform (whatever you want to call it) to ensure these values are upheld? AI can do good work with the proper constraints and workflows, as can humans. But neither does very good work without them.

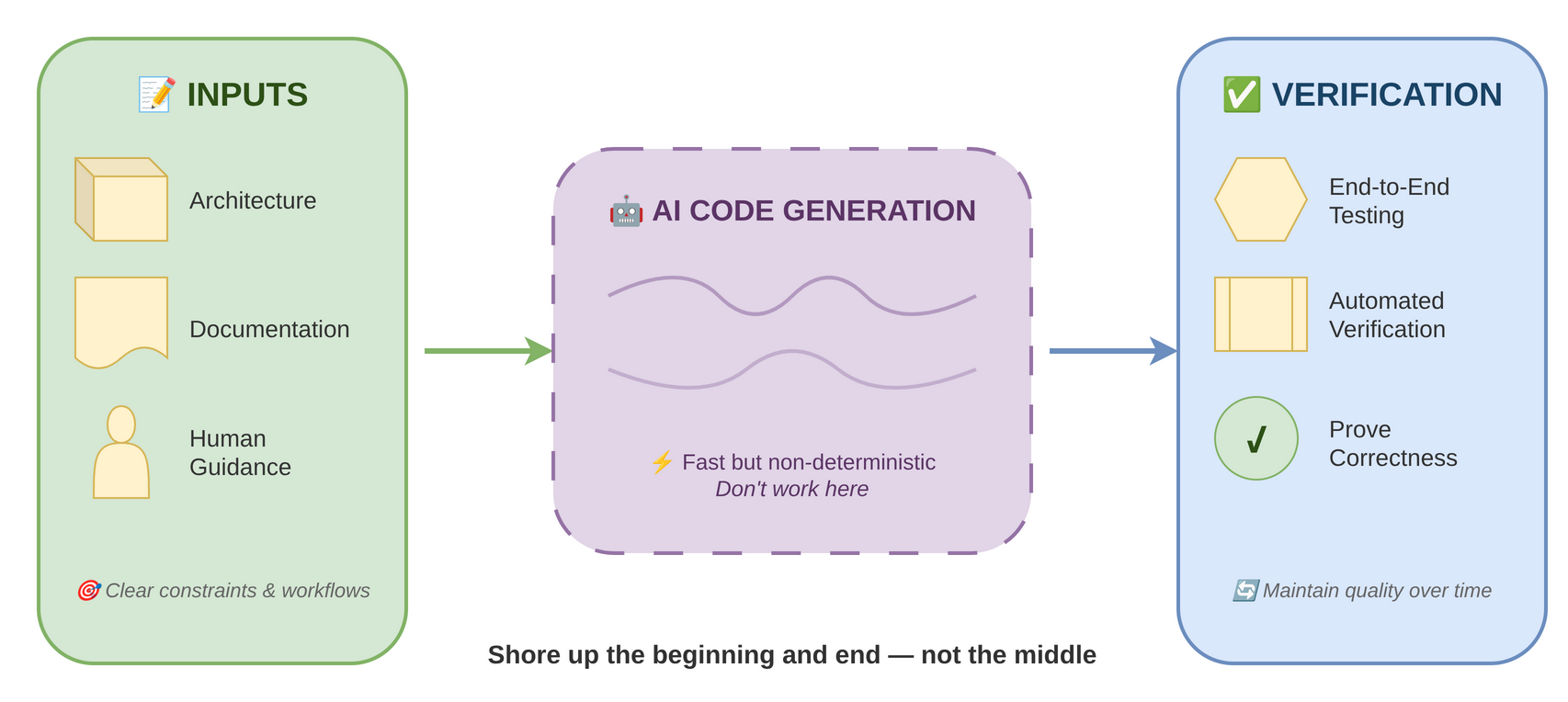

That said, as the above feedback suggested, AI is much less deterministic than any compiler (two consecutive runs with the same prompt generally do not produce the same result), so it needs clear guidance and robust verification to ensure a quality end result. All of this can be accomplished, but it needs to be thought through.

While compiler warnings, linters, code review, and unit tests help ensure that compiled code is of good quality, AI is a different beast. If humans are too slow to write the code originally, they are certainly too slow to review it. It is like reviewing machine code from compilers - at some point you need to trust the compiler. The errors in AI-generated code will likely be architectural deficiencies or logical errors at a macro level. Bad architecture can bury you in technical debt, but it takes time - initially it works fine. Thus several things are critical:

- Getting started with a good architecture. AI can help with this, but an experienced developer needs to have input.

- Good, concise documentation. Unlike machine-generated code, this is something humans can review.

- Automated end-to-end testing. This is the only practical way to demonstrate and maintain correctness.
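
As a rough illustration of the last point, here is a minimal sketch of an end-to-end check in Python. Everything in it is hypothetical: `PROGRAM` is a stand-in one-line CLI that sums its integer arguments, and `run_cli`, `CASES`, and `check_all` are names invented for this example. The idea is that the expected behavior is short enough for a human to review, and the check only touches the program from the outside, so it stays valid however the code behind it was produced or regenerated.

```python
import subprocess
import sys

# Hypothetical program under test: a tiny CLI that sums its integer arguments.
# In a real project this would be the actual application, not an inline string.
PROGRAM = "import sys; print(sum(int(a) for a in sys.argv[1:]))"


def run_cli(args):
    """Run the program as a separate process, as a user would, and return its stdout."""
    result = subprocess.run(
        [sys.executable, "-c", PROGRAM, *args],
        capture_output=True,
        text=True,
        check=True,  # a non-zero exit code raises CalledProcessError
    )
    return result.stdout.strip()


# Expected behavior, written and reviewed by a human: (arguments, expected output).
CASES = [
    (["1", "2", "3"], "6"),
    ([], "0"),
    (["-5", "5"], "0"),
]


def check_all():
    """Return (args, expected, actual) for every case whose output does not match."""
    failures = []
    for args, expected in CASES:
        actual = run_cli(args)
        if actual != expected:
            failures.append((args, expected, actual))
    return failures
```

A CI job could call `check_all()` after every change, whether made by AI or by a human, and fail the build if the returned list is not empty.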

With AI development, better inputs and more verification are critical. Trying to work in the middle of the AI coding process is futile and exhausting. The beginning and the end of the process need to be shored up. But this requires better workflows and more discipline. While undisciplined traditional development is a nightmare, undisciplined AI development is a train wreck.