What defines reliability?

Reliability is a common concern: this thing is critical, so it needs to work!

Many things affect the reliability of a system:

- Bugs in software we write

- Bugs in 3rd party components (OSS, etc.)

- Hardware failures

- Edge condition failures (things we have not tested)

- Tolerance stack-ups

- Damage in the field

- The list goes on ...

Some common assumptions:

- Hardware is more reliable than software.

- Safety-certified operating systems are more reliable than non-certified ones.

- US-made components are more reliable than those from other countries.

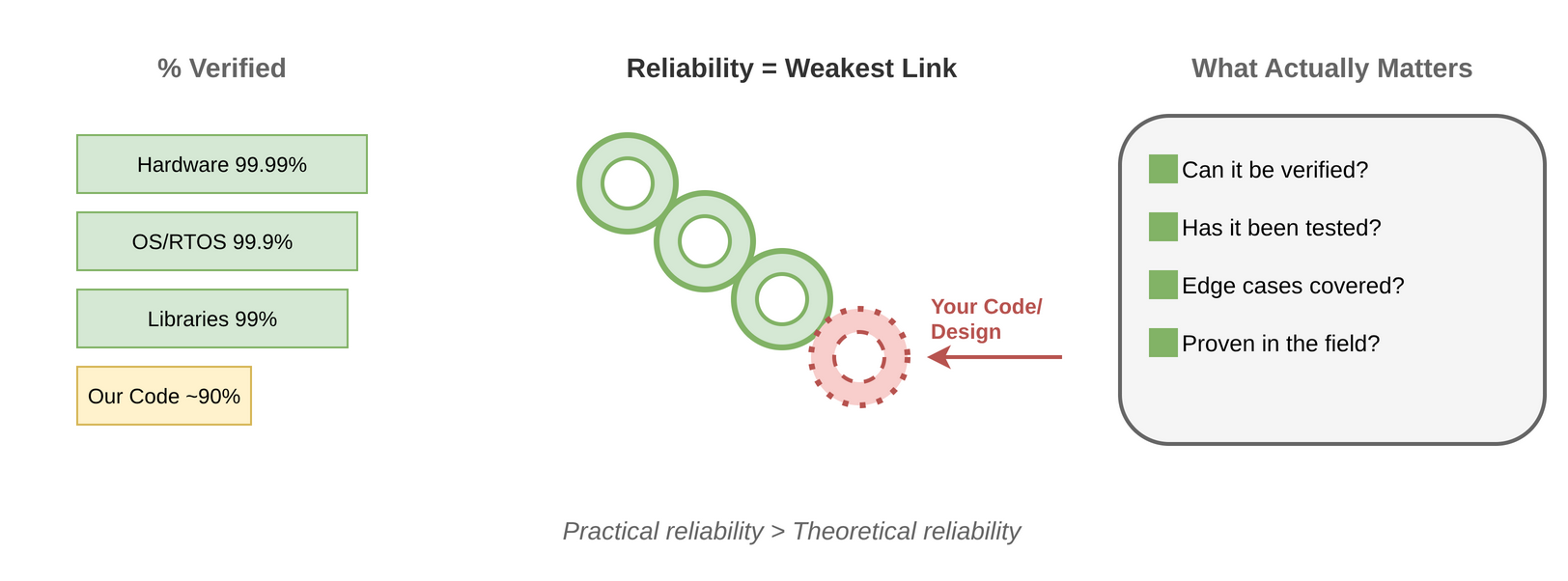

Here's the thing: for 99% of products, most of the above does not matter. The most likely source of bugs and reliability problems is the stuff we built ourselves. If SafeRTOS is 99.999% verified and Zephyr is only 99.9% verified, it makes little difference when the software we wrote is only 90% verified. Likewise, if a tricky analog hardware circuit has not been tested in every edge condition and verified at every combination of component tolerances, it may be less reliable than a simpler RTOS application running on an MCU.
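One way to see why the RTOS choice barely moves the needle is a simple series model: if the system works only when every layer works, the layer reliabilities multiply. This is an assumption-laden illustration, not a real reliability analysis. It treats each "percent verified" figure from the paragraph above loosely as an independent probability that the layer behaves correctly, and the function name `series_reliability` is hypothetical.

```python
# Hypothetical series model: the system works only if every layer works,
# and each layer's "percent verified" is treated (loosely) as an
# independent probability that the layer behaves correctly.
from math import prod


def series_reliability(layers):
    """Return the product of per-layer reliabilities (independent, in series)."""
    return prod(layers)


APP = 0.90          # the software we wrote (figure from the text)
SAFERTOS = 0.99999  # illustrative figure from the text
ZEPHYR = 0.999      # illustrative figure from the text

with_safertos = series_reliability([SAFERTOS, APP])
with_zephyr = series_reliability([ZEPHYR, APP])

print(f"SafeRTOS + app: {with_safertos:.4%}")
print(f"Zephyr   + app: {with_zephyr:.4%}")
print(f"Difference:     {with_safertos - with_zephyr:.4%}")
```

Under these assumptions both combinations land just under 90%, and the gap between them is less than a tenth of a percentage point. The 90% layer dominates the result.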

Practical reliability is what matters, not theoretical reliability. What defines reliability in most systems is whether the design can be verified. A common MCU and RTOS (like Zephyr) has already been extensively tested by many different people and shown to be reliable in most conditions. Writing a relatively simple application on top of a complex but verified system makes the job easier, because the difficult verification work gets pushed off onto someone else. The same analysis applies to concerns like performance and security: the system is only as good as its weakest link. Reliability does not correlate directly with system complexity so much as with the percentage of the system that can be proven correct.
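The "percent proven correct" idea can be made concrete by counting the code nobody has verified. The sizes and verification fractions here are invented for illustration, lines of code stand in as a crude proxy for complexity, and `Component`, `unverified_lines`, and `percent_verified` are hypothetical names.

```python
# Hypothetical sizes and verification fractions, for illustration only.
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    lines: int       # size of the component (a rough proxy for complexity)
    verified: float  # fraction of that code that has been verified


def unverified_lines(components):
    """Lines of code nobody has verified, summed across components."""
    return sum(c.lines * (1 - c.verified) for c in components)


def percent_verified(components):
    """Fraction of all lines, across components, that has been verified."""
    total = sum(c.lines for c in components)
    return 1 - unverified_lines(components) / total


# Option A: large, widely tested RTOS plus a small in-house application.
option_a = [
    Component("RTOS + drivers", 100_000, 0.999),
    Component("our application", 5_000, 0.90),
]
# Option B: smaller custom design, all written and verified in-house.
option_b = [Component("custom firmware", 20_000, 0.90)]

for label, design in (("A", option_a), ("B", option_b)):
    total = sum(c.lines for c in design)
    print(f"Option {label}: {total} lines, "
          f"{unverified_lines(design):.0f} unverified, "
          f"{percent_verified(design):.2%} verified")
```

With these made-up numbers, option A is more than five times larger than option B, yet it leaves about 600 unverified lines to option B's 2,000, so it ends up with the higher percentage verified (about 99.4% versus 90%).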

There are no easy answers here, but don't strain at a gnat and swallow a camel by latching onto theoretical reliability, performance, security, or whatever else while ignoring the practical concerns.